Unix Commands

What will you learn from this Hadoop Commands tutorial?

This Hadoop tutorial lists commonly used hadoop fs commands for managing files on a Hadoop cluster. These HDFS commands can be run on a pseudo-distributed cluster or from any of the vendor VMs, such as those from Hortonworks or Cloudera.

Pre-requisites to follow this Hadoop tutorial

- Hadoop must be installed.

- Hadoop Cluster must be configured.

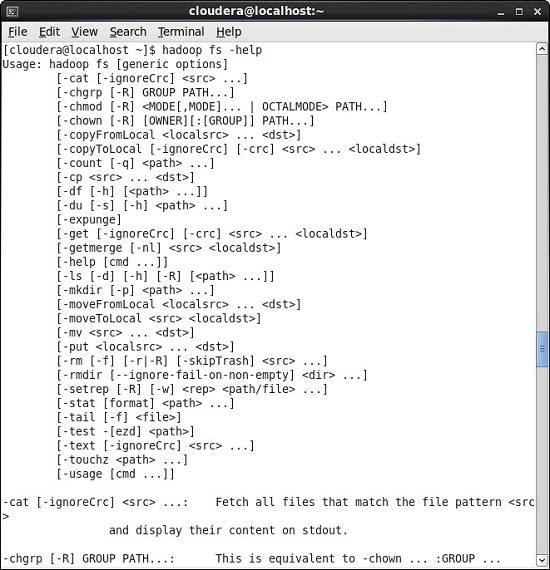

1) help HDFS Shell Command

Syntax of help hdfs Command

$ hadoop fs -help

The help HDFS shell command lists all the available hadoop fs commands and shows how to use them.

Variations of the Hadoop fs Help Command

$ hadoop fs -help ls

Using the help command with a specific command lists the usage information along with the options to use the command.

2) Usage HDFS Shell Command

$ hadoop fs -usage ls

Usage command gives all the options that can be used with a particular hdfs command.

3) ls HDFS Shell Command

Syntax for ls Hadoop Command -

$ hadoop fs -ls

This command lists all the files and subdirectories under the default directory, which is the current user's HDFS home directory. In our example, the default directory for the Cloudera VM is /user/cloudera.

Variations of Hadoop ls Shell Command

$ hadoop fs -ls /

Returns all the available files and subdirectories present under the root directory.

$ hadoop fs -ls -R /user/cloudera

Recursively lists all the files and subdirectories under /user/cloudera.
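The recursive listing can be filtered with standard Unix tools. The following is a minimal sketch, assuming the usual eight-column listing format (permissions, replication, owner, group, size, date, time, path) and paths that contain no whitespace. The function name hdfs_list_files is hypothetical and not part of Hadoop.

```shell
# Print only the file paths (not directories) found under an HDFS directory.
# Assumes the usual 8-column -ls output and paths without whitespace.
hdfs_list_files() {
    # File entries start with "-" in the permissions column;
    # directory entries start with "d", and the "Found N items" line is skipped.
    hadoop fs -ls -R "$1" | awk '$1 ~ /^-/ { print $8 }'
}

# Usage (requires a running cluster):
#   hdfs_list_files /user/cloudera
```

If your paths can contain spaces, the awk column split is not reliable and the listing needs more careful parsing.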

4) mkdir

Creates a new directory in HDFS at a given location.

Example of HDFS mkdir Command -

$ hadoop fs -mkdir /user/cloudera/dezyre1

The above command will create a new directory named dezyre1 under the location /user/cloudera

Note: Cloudera and other Hadoop distribution vendors provide the /user/ directory with read/write permission for all users, but other directories are read-only. To create a folder in the root directory, users therefore need superuser permission, as shown below:

$ sudo -u hdfs hadoop fs -mkdir /dezyre

This command will create a new directory named dezyre under the / (root directory).

5) copyFromLocal

Copies a file from the local filesystem to an HDFS location.

For the following examples, we will use the sample files (Sample1.txt, Sample2.txt, Sample3.txt) available in the /home/cloudera location.

Example - $ hadoop fs -copyFromLocal Sample1.txt /user/cloudera/dezyre1

Copy/Upload Sample1.txt available in /home/cloudera (local default) to /user/cloudera/dezyre1 (hdfs path)

6) put

This hadoop command uploads a single file or multiple source files from the local file system to the Hadoop distributed file system (HDFS).

Example - $ hadoop fs -put Sample2.txt /user/cloudera/dezyre1

Copy/Upload Sample2.txt available in /home/cloudera (local default) to /user/cloudera/dezyre1 (hdfs path)
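The mkdir and put commands can be combined into a small helper that uploads several files in one call. This is only a sketch: the function name hdfs_upload is hypothetical, and it assumes a Hadoop 2 or later release, where -mkdir accepts the -p flag.

```shell
# Upload one or more local files into an HDFS directory, creating it if needed.
# Usage: hdfs_upload <hdfs_dir> <local_file>...
hdfs_upload() {
    dest=$1
    shift
    # -p creates missing parent directories and does not fail if the directory exists.
    hadoop fs -mkdir -p "$dest" || return 1
    # -put accepts several sources when the destination is a directory.
    hadoop fs -put "$@" "$dest"
}

# Usage (requires a running cluster):
#   hdfs_upload /user/cloudera/dezyre1 Sample2.txt Sample3.txt
```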

7) moveFromLocal

This hadoop command works like the put command, but the source file is deleted after copying.

Example - $ hadoop fs -moveFromLocal Sample3.txt /user/cloudera/dezyre1

Move Sample3.txt available in /home/cloudera (local default) to /user/cloudera/dezyre1 (hdfs path). Source file will be deleted after moving.

8) du

Displays the disk usage for all the files available under a given directory.

Example - $ hadoop fs -du /user/cloudera/dezyre1

9) df

Displays the capacity, used space, and free space of the current Hadoop distributed file system.

Example - $ hadoop fs -df

10) expunge

This HDFS command empties the trash, permanently deleting the files and directories it holds.

Example - $ hadoop fs -expunge

11) cat

This is similar to the cat command in Unix and displays the contents of a file.

Example - $ hadoop fs -cat /user/cloudera/dezyre1/Sample1.txt

12) cp

Copy files from one HDFS location to another HDFS location.

Example - $ hadoop fs -cp /user/cloudera/dezyre/war_and_peace /user/cloudera/dezyre1/

13) mv

Move files from one HDFS location to another HDFS location.

Example - $ hadoop fs -mv /user/cloudera/dezyre1/Sample1.txt /user/cloudera/dezyre/

14) rm

Removes a file or directory from the given HDFS location.

rm -r

Example - $ hadoop fs -rm -r /user/cloudera/dezyre3

Recursively deletes the directory and its contents from the HDFS location.

Example - $ hadoop fs -rm /user/cloudera/dezyre3

Deletes the file at the given HDFS location. Without -r, rm does not remove directories.

15) tail

This hadoop command prints the last kilobyte of the file to stdout.

Example - $ hadoop fs -tail /user/cloudera/dezyre/war_and_peace

Example - $ hadoop fs -tail -f /user/cloudera/dezyre/war_and_peace

With the -f option, tail keeps running and prints new data as it is appended to the file, as in Unix.

16) copyToLocal

Copies files to the local filesystem. This is similar to the hadoop fs -get command, except that the destination must be a local file reference.

Example - $ hadoop fs -copyToLocal /user/cloudera/dezyre1/Sample1.txt /home/cloudera/hdfs_bkp/

Copy/Download Sample1.txt available in /user/cloudera/dezyre1 (hdfs path) to /home/cloudera/hdfs_bkp/ (local path)

17) get

Downloads or Copies the files to the local filesystem.

Example - $ hadoop fs -get /user/cloudera/dezyre1/Sample2.txt /home/cloudera/hdfs_bkp/

Copy/Download Sample2.txt available in /user/cloudera/dezyre1 (hdfs path) to /home/cloudera/hdfs_bkp/ (local path)

18) touchz

Used to create an empty file at the specified location.

Example - $ hadoop fs -touchz /user/cloudera/dezyre1/Sample4.txt

It will create a new empty file Sample4.txt in /user/cloudera/dezyre1/ (hdfs path)

19) setrep

This hadoop fs command sets the replication factor for a specific file. The -w option makes the command wait until the replication has completed.

Example - $ hadoop fs -setrep -w 1 /user/cloudera/dezyre1/Sample1.txt

It will set the replication factor of Sample1.txt to 1
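To confirm the change, the replication factor can be read back with the -stat command and its %r format specifier. The sketch below wraps both steps in one function; the name hdfs_set_replication is hypothetical.

```shell
# Set the replication factor of a file and print the value HDFS now reports.
# Usage: hdfs_set_replication <factor> <hdfs_file>
hdfs_set_replication() {
    # -w waits until the target replication is reached, which can take a while.
    hadoop fs -setrep -w "$1" "$2" || return 1
    # %r is the -stat format specifier for the replication factor.
    hadoop fs -stat %r "$2"
}

# Usage (requires a running cluster):
#   hdfs_set_replication 1 /user/cloudera/dezyre1/Sample1.txt
```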

20) chgrp

This hadoop command changes the group association of files and directories.

Example - $ sudo -u hdfs hadoop fs -chgrp -R cloudera /dezyre

It will change the group of the /dezyre directory from supergroup to cloudera. (Superuser permission is required to perform this operation.)

21) chown

This command changes the owner of a file or directory and, with the owner:group form, the group at the same time.

Example - $ sudo -u hdfs hadoop fs -chown -R cloudera /dezyre

It will change the ownership of the /dezyre directory from the hdfs user to the cloudera user. (Superuser permission is required to perform this operation.)

22) chmod

Used to change the permissions of a given file or directory.

Example - $ hadoop fs -chmod 700 /dezyre

It will change the /dezyre directory permission to 700 (drwx------).

Note: chmod 777

To run this command, the user must be the owner of the file or a superuser. After it runs, all users have read, write, and execute permission on the file.
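Each digit of an octal mode such as 700 or 777 stands for one rwx triplet: the first for the owner, the second for the group, and the third for everyone else. The sketch below spells out that mapping in plain shell. The function name octal_to_symbolic is hypothetical, and the code does not touch HDFS.

```shell
# Convert a three-digit octal mode such as 700 into its rwx string.
octal_to_symbolic() {
    mode=$1
    result=""
    # Split the mode into single digits: "700" becomes "7 0 0".
    for digit in $(printf '%s' "$mode" | sed 's/./& /g'); do
        case $digit in
            0) result="${result}---" ;;
            1) result="${result}--x" ;;
            2) result="${result}-w-" ;;
            3) result="${result}-wx" ;;
            4) result="${result}r--" ;;
            5) result="${result}r-x" ;;
            6) result="${result}rw-" ;;
            7) result="${result}rwx" ;;
            *) echo "invalid octal digit: $digit" >&2; return 1 ;;
        esac
    done
    printf '%s\n' "$result"
}

octal_to_symbolic 700   # prints rwx------
octal_to_symbolic 777   # prints rwxrwxrwx
```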

-----------------------

http://bigdataplanet.info/p/what-is-big-data.html

Here http is the scheme, bigdataplanet.info is the authority, and /p/what-is-big-data.html is the path, which points to the article what-is-big-data.html.

Similarly, for HDFS the scheme is hdfs, and for the local filesystem the scheme is file.

The scheme and authority are optional; when they are omitted, the defaults from the configuration file are used.
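The three parts of such a URI can be separated with ordinary shell parameter expansion. This is a minimal sketch that assumes the URI has the form scheme://authority/path. The variable names are illustrative.

```shell
# Split a URI of the form scheme://authority/path into its three parts.
uri="http://bigdataplanet.info/p/what-is-big-data.html"

scheme=${uri%%://*}        # text before "://"
rest=${uri#*://}           # text after "://"
authority=${rest%%/*}      # text up to the first "/"
path=/${rest#*/}           # the first "/" and everything after it

echo "scheme=$scheme authority=$authority path=$path"
# prints scheme=http authority=bigdataplanet.info path=/p/what-is-big-data.html
```

An HDFS path written in full has the same shape, with hdfs as the scheme and the NameNode address as the authority.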

So let's get started and look at the commands:

mkdir

Create directory in the given path:

hadoop fs -mkdir <paths>

Ex: hadoop fs -mkdir /user/deepak/dir1

ls

Lists the files in the given path:

hadoop fs -ls <args>

Ex: hadoop fs -ls /user/deepak

lsr

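Recursive version of ls, similar to ls -R in the Unix shell. It is deprecated in newer Hadoop releases in favor of hadoop fs -ls -R.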

hadoop fs -lsr <args>

Ex: hadoop fs -lsr /user/deepak

touchz

Creates a zero-length file at the given path:

hadoop fs -touchz <path[filename]>

Ex: hadoop fs -touchz /user/deepak/dir1/abc.txt

cat

Same as unix cat command:

hadoop fs -cat <path[filename]>

Ex: hadoop fs -cat /user/deepak/dir1/abc.txt

cp

Copy files from source to destination. This command allows multiple sources as well in which case the destination must be a directory.

hadoop fs -cp <source> <dest>

Ex: hadoop fs -cp /user/deepak/dir1/abc.txt /user/deepak/dir2

put

Copies a single source, or multiple sources, from the local file system to the destination filesystem. It can also read input from stdin and write it to the destination filesystem.

hadoop fs -put <source:localFile> <destination>

Ex: hadoop fs -put /home/hduser/def.txt /user/deepak/dir1

get

Copies files to the local file system.

hadoop fs -get <source> <dest:localFileSystem>

Ex: hadoop fs -get /user/deepak/dir1/def.txt /home/hduser/def.txt

copyFromLocal

Similar to put except that the source is limited to local files.

hadoop fs -copyFromLocal <src:localFileSystem> <dest:Hdfs>

Ex: hadoop fs -copyFromLocal /home/hduser/def.txt /user/deepak/dir1

copyToLocal

Similar to get except that the destination is limited to local files.

hadoop fs -copyToLocal <src:Hdfs> <dest:localFileSystem>

Ex: hadoop fs -copyToLocal /user/deepak/dir1/def.txt /home/hduser/def.txt

mv

Moves files from source to destination. Moving files across filesystems is not permitted.

hadoop fs -mv <src> <dest>

Ex: hadoop fs -mv /user/deepak/dir1/abc.txt /user/deepak/dir2

rm

Removes the files specified as arguments. To delete a directory and its contents, use the recursive form of the command.

hadoop fs -rm <arg>

Ex: hadoop fs -rm /user/deepak/dir1/abc.txt

rmr

Recursive version of rm. It is deprecated in newer Hadoop releases in favor of hadoop fs -rm -r.

hadoop fs -rmr <arg>

Ex: hadoop fs -rmr /user/deepak/

stat

Returns the stat information for the path.

hadoop fs -stat <path>

Ex: hadoop fs -stat /user/deepak/dir1

tail

Displays the last kilobyte of the file on stdout, similar to the Unix tail command.

hadoop fs -tail <path[filename]>

Ex: hadoop fs -tail /user/deepak/dir1/abc.txt

test

Test comes with the following options:

-e checks whether the file exists. Returns 0 if true.

-z checks whether the file is zero length. Returns 0 if true.

-d checks whether the path is a directory. Returns 0 if true in current Hadoop releases.

hadoop fs -test -[ezd] <path>

Ex: hadoop fs -test -e /user/deepak/dir1/abc.txt
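Because -test reports its result through the exit status rather than through printed output, it is usually used inside a shell conditional. A minimal sketch, with the hypothetical function name hdfs_exists:

```shell
# Report whether an HDFS path exists, based on the exit status of -test -e.
hdfs_exists() {
    if hadoop fs -test -e "$1"; then
        echo "exists: $1"
    else
        echo "missing: $1"
    fi
}

# Usage (requires a running cluster):
#   hdfs_exists /user/deepak/dir1/abc.txt
```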

text

Takes a source file and outputs the file in text format. The allowed formats are zip and TextRecordInputStream.

hadoop fs -text <src>

Ex: hadoop fs -text /user/deepak/dir1/abc.txt

du

Displays the aggregate length of the files contained in a directory, or the length of a file if the path is just a file.

hadoop fs -du <path>

Ex: hadoop fs -du /user/deepak/dir1/abc.txt

dus

Displays a summary of file lengths. It is deprecated in newer Hadoop releases in favor of hadoop fs -du -s.

hadoop fs -dus <args>

Ex: hadoop fs -dus /user/deepak/dir1/abc.txt

expunge

Empty the trash.

hadoop fs -expunge

chgrp

Change group association of files. With -R, make the change recursively through the directory structure. The user must be the owner of files, or else a super-user.

hadoop fs -chgrp [-R] GROUP <path>

chmod

Change the permissions of files. With -R, make the change recursively through the directory structure. The user must be the owner of the file, or else a super-user.

hadoop fs -chmod [-R] <MODE[,MODE] | OCTALMODE> <path>

chown

Change the owner of files. With -R, make the change recursively through the directory structure. The user must be a super-user.

hadoop fs -chown [-R] [OWNER][:[GROUP]] <path>

getmerge

Takes a source directory and a destination file as input and concatenates files in src into the destination local file. Optionally addnl can be set to enable adding a newline character at the end of each file.

hadoop fs -getmerge <src> <localdst> [addnl]

setrep

Changes the replication factor of a file. The -R option recursively changes the replication factor of the files within a directory.

hadoop fs -setrep [-R] [-w] <rep> <path>
